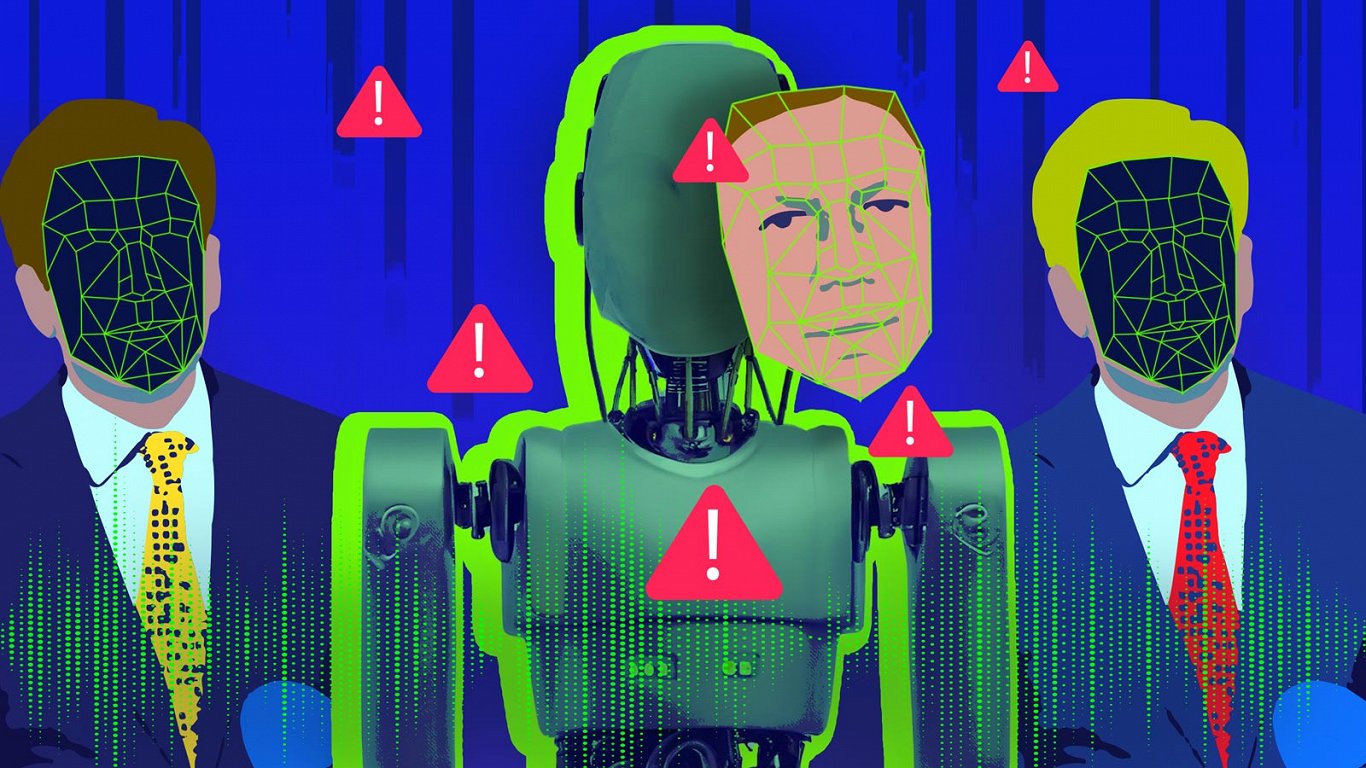

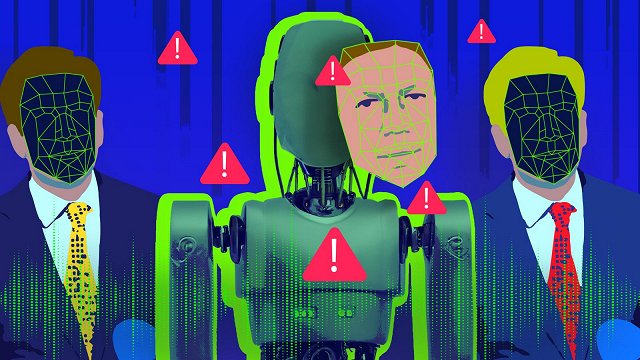

'Deepfake' content is essentially online fakery, often created using artificial intelligence tools that can convincingly mimic human faces and voices, producing video, audio and images that look real but are completely fake.

In August, Samsung Electronics Baltics conducted an online survey in cooperation with the research agency Norstat. A total of 930 students aged 15 to 19 took part.

More than half (53%) of young people say they have recognized deepfake material created by artificial intelligence online at least once.

Survey data show that 16% of young people in Latvia have also used deepfake technology themselves, with its ready availability and ease of use noted as factors.

The most common type of fakery is an image that uses a person's face to create a fake but very convincing visual. These images are usually distributed on social networks or various platforms, often for misleading or malicious purposes. Image hoaxes are easier to create than videos.

Deepfake videos are technically more sophisticated and use artificial intelligence to reproduce moving images. Faces and public appearances of celebrities or politicians are often faked. A fake can often be recognized by illogical facial movements, mismatches between lip movements and voice, and blurring in the video. This type of spoofing is often used for entertainment, but it can also be used to mislead, for example by spreading false information about someone.

The head of Latvia's Safer Internet Center (Drossinternets.lv), Maija Katkovska, commented that fakes are increasingly used with the aim of harming someone or spreading misinformation: "Unfortunately, in our work, we also increasingly see cases where young people use fake images to increase cyber-mobbing [bullying] against their peers, for example by creating a fake nude photo and distributing it.

"Although the victimized youth knows that it is not their body to which the face is attached, it is still difficult to stop the avalanche of insulting and rude comments that are received. Also, several cases are known here in Europe, when, due to politically motivated payback, fake videos of pornographic content are distributed during election periods in order to undermine the reputation of an opponent."

"Increasingly, fraudsters are using artificial intelligence programs, for example, the voice of the son heard in the video published on social media is imitated in the phone call to the mother, encouraging the urgent transfer of financial funds. Although regulation is being debated at the European level to limit the use of artificial intelligence tools for malicious purposes, we must be ready to respond to this type of content today. Therefore, I recommend using every opportunity for both students and educators to get in-depth information and understand the operation of artificial intelligence tools in order to protect themselves from the risks they create," advised Katkovska.

To recognize a deepfake video, it can help to pay attention to oddities in facial movement, such as illogical eye blinks or mouth movements, as well as discrepancies between the lighting on the face and the rest of the image. If facial details are blurred or indistinct, this may indicate a fake. It is also important to observe whether the voice is naturally synchronized with the lip movements and whether there are any strange objects or distortions in the background.